SparkLing: a platform enabling early childhood instructors to teach a second language

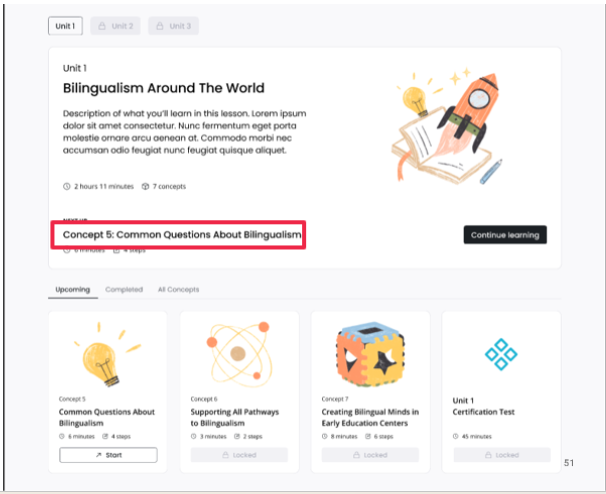

I led research that helped designers iterate from the mid-fidelity designs (left) to the final designs (right). This image shows the dashboard view.

Overview

Contributions: Senior UX Researcher and Service Designer

Collaborators: Product Owners (Research Scientists), Philanthropic Funders, Senior Designers, Senior Developers, Vice President of Product

Timeline: 11/16/23-3/25/24

Introduction

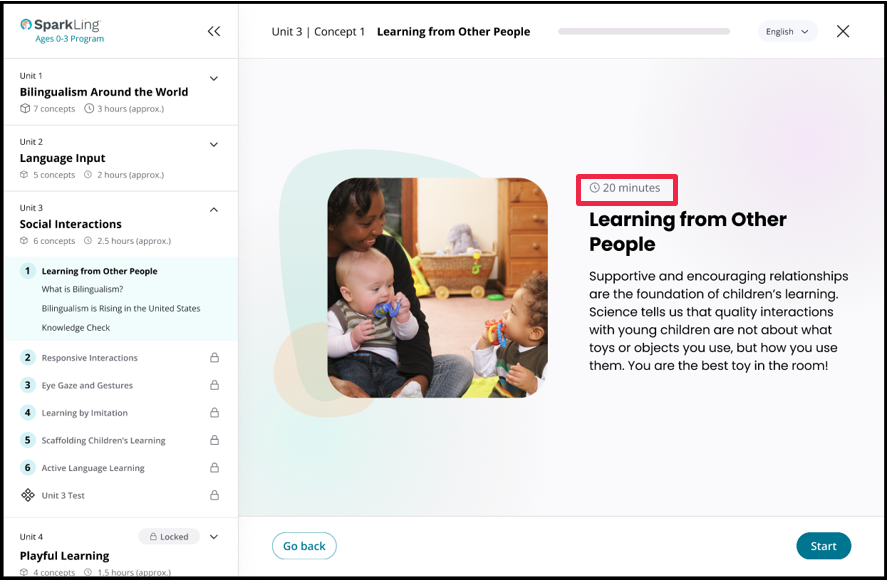

SparkLing is a platform that trains early childhood educators to build and teach second-language skills with early learners. Once teachers demonstrate that they have learned the methodologies, they can earn certification in teaching a scientifically validated, evidence-based curriculum.

From SparkLing 4/25

My Contribution and Process

I led design and usability research to take SparkLing from low-fidelity prototypes to launch. To accomplish this, I led stakeholders to:

Negotiate the scope and timeline of the project

Test the site navigation

Test training interactions

Test real-world implications

Negotiating Scope

Stakeholder Buy-In and Alignment

For this project, my clients (via Optimistic) were from the University of Washington's ILABS. They are rigorous research scientists who lead and teach at the cutting edge of linguistics, neurology, learning, and medical applications.

They wanted to test scientifically valid research questions for this project. However, product testing works a bit differently.

I needed to tactfully communicate:

The need to test rapidly in order to align with our development timeline

The value of usability testing with just the right number of participants, in lieu of testing for statistical significance

The value of a variety of product testing methodologies, including both quantitative and qualitative methods

The limits of my ability to test instructional methodologies for learning effectiveness, while advocating for an audit by a Learning Experience Designer

By communicating opportunities and limitations, we were able to audit materials with instructional designers and develop research methodologies to fuel rapid iterations.

Here is a high-level overview of the scope negotiation process.

Research Sprints

After onboarding and reviewing the platform prototype and the documentation on the learning methodology, I renegotiated the scope of the research sprints and shared it with stakeholders.

Our Research Participants

I would be testing this platform with bilingual teachers, many of whom spoke English as a second language. I produced two different guides for the first two rounds to accommodate their language needs.

Research Round 1: Testing Site Navigation

Round 1 Objective

Gain a deeper understanding of organizational and teacher workflows, needs, and preferences to identify iterations that better reflect instructors' mental models.

Round 1 Tested

1. Information Architecture

2. Dashboard and Overview/Landing Page

3. Language Accessibility

4. Mental Models

Methods

Information Architecture audit and Treejack testing to evaluate and simplify navigation flows.

Task-based usability testing with probes to evaluate feature and interaction usability.

Interviews covering ethnographic and process-related questions to gather information on organizational context and broader workflows.

Participants

8 early childhood educators

4 states (FL, AZ, TX, KC)

3 different predominant languages spoken

Primary devices for trainings include laptops, tablets, and phones

Data Synthesis

I shared findings with designers on Miro as I synthesized them, and with the product owners in formal presentations.

Insights

Effective trainings leave a lasting impression when they are practical, adaptable, and reinforce existing practices.

Early childhood teachers face challenges as they complete trainings during working hours in unpredictable, dynamic classroom environments.

For teachers to support student progress, adapting lesson plans requires time, motivation, and analysis.

After completing trainings, teachers want to assess their comprehension as they apply learned skills.

“I will go first, like to my coworkers… and, you know, ask… What can I do? Can you help me? And…we go, you know, to our director and ask like, ‘Hey, what do you think it will be better?’ Or you know I can even go ask to a Pre K, teacher or preschool teacher, because even that their tools we are always looking for them to be, you know, mature and independent enough to, you know, challenge them on their activities.” -Participant 3

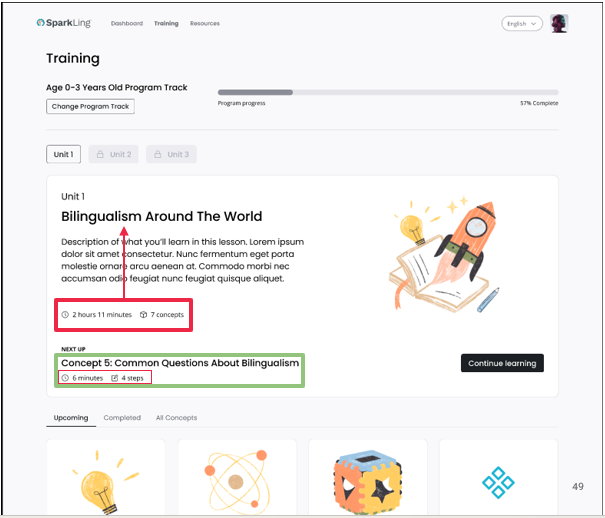

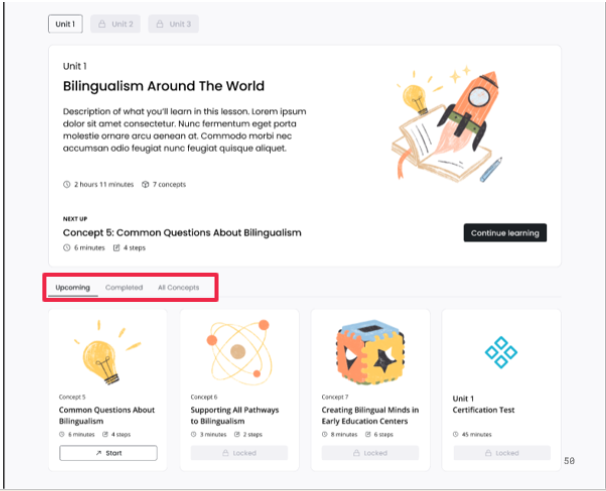

Recommendations

Refine some of the language, such as "track," and restate the reasoning in feedback when teachers answer correctly.

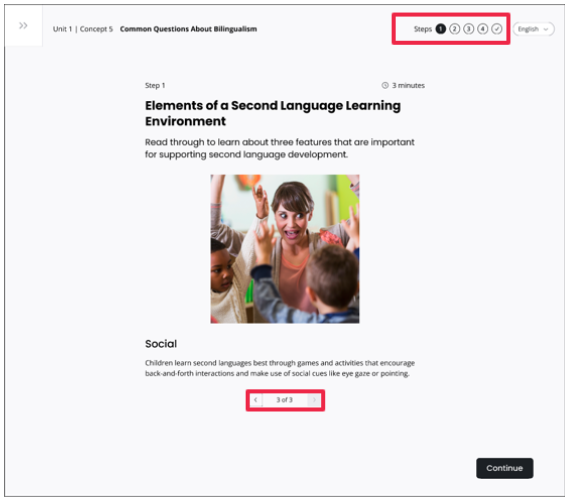

Clarify whether the progress tracker maps to the course or to the unit.

Use more font variation and more consistent chunking to improve the platform's learnability.

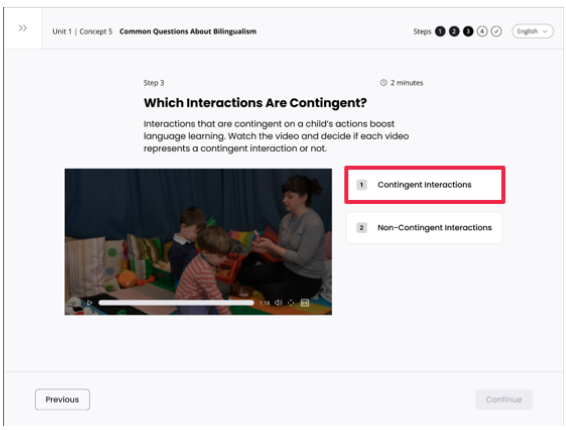

Simplify the training mode interactions by reducing information about progress within a concept.

Research Round 2: Testing Training Interactions

Round 2 Objective

Evaluate the lesson plan page, onboarding flow, and interactions to improve their usability and better reflect teacher expectations.

Round 2 Tested

1. Teachers' planning processes

2. The perceived value of a lesson plan preview page

3. Onboarding flow for the lesson plan page

Methods

Semi-structured interviews to learn about lesson planning processes.

Task-based usability testing with probes to test the onboarding wizard and lesson planning page.

Surveys to understand the perceived value of the lesson plan page after participants used it.

Participants

7 early childhood educators

4 states (TX, WA, OR, FL)

Data Synthesis

Insights

Teachers use data on age, engagement, learning goals, IEPs and IFSPs, and mood to optimize lesson plans.

Teachers calibrate their lessons to target the five NAEYC domains because they have to negotiate goals with parents.

Teacher workflows change quickly, and lesson plans should be adaptable to this dynamic.

“I have a lot of parents who are tiger parents, and they just want to know how their kids will be ready for kindergarten. I’d love to have some kind of cheat sheet where it’s mapped out… what are the skills that you’re striving for and where are they coming from?... You might have to defend what you’re doing.” -Participant 5

Recommendations

Consider testing with students with special needs to better understand how this might impact an IEP or IFSP.

Determine how to frame SparkLing's marketing in alignment with the NAEYC domains, as these are often treated as the national standard.

Reflect other routines that the resources may not account for, such as potty training and eating.

Research Round 3: Defining Real-World Implications

Round 3 Objective

Round 3 Tested

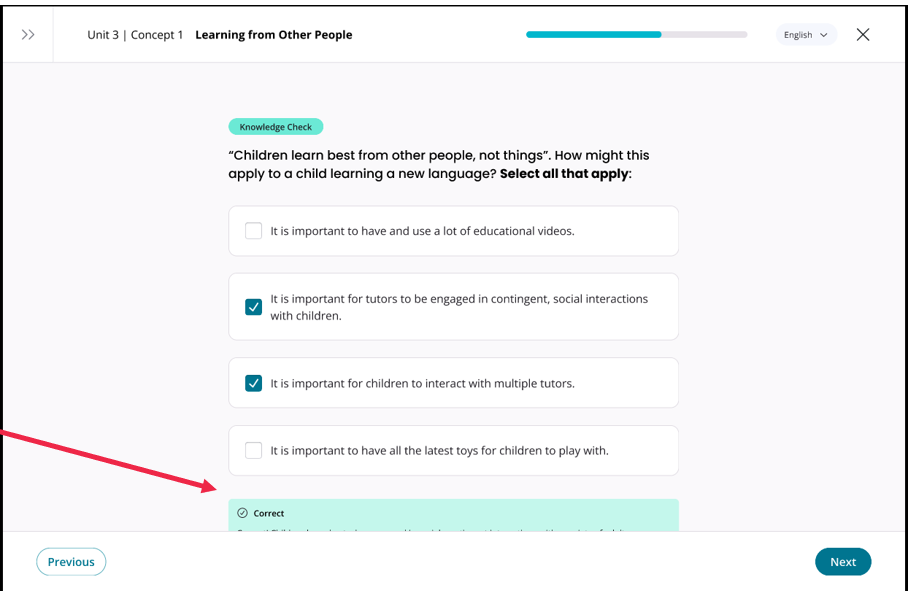

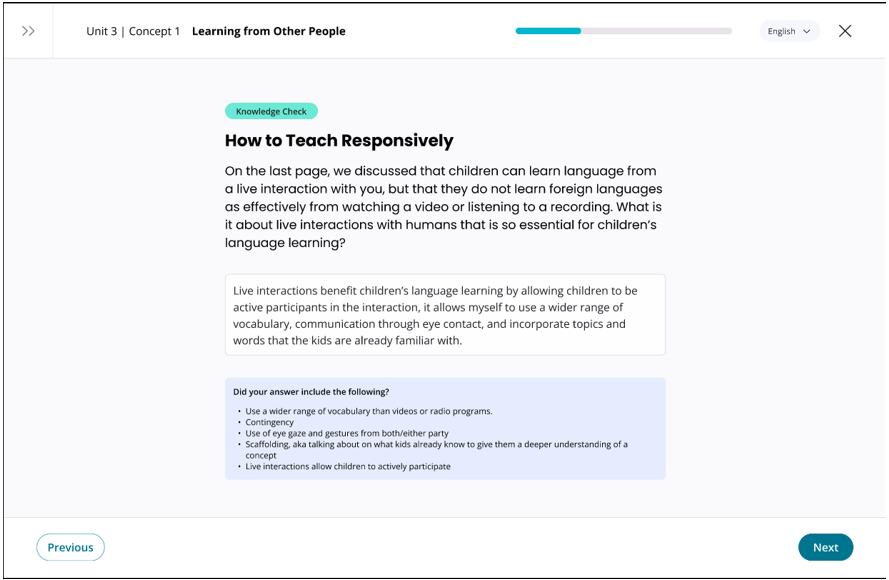

To evaluate an end-to-end "concept" (or module) within a lesson, novel question types, and to gather key metrics to understand SparkLing's current performance, teacher expectations, and potential for growth and scaling.

1. End-to-end concept

2. Open-ended question type to understand expectations for feedback and current design experience

3. Key performance metrics and indicators for scaling and growth

Methods

Time on task to evaluate the time required to complete an end-to-end concept.

Task-based observation with probes to uncover usability snafus.

Survey to collect key metrics such as NPS and satisfaction ratings.

Three-word exercise to gather affective impressions of a module.

Participants

6 participants

4 different early childhood education centers

3 states (TX, WA, AZ)

Data Synthesis

Insights

Teachers liked the overall flow of information, visuals, and review because it matched their expectations.

The amount of content and reading was overwhelming; additional tooling is needed to support a broader range of teacher and usability needs.

NPS and satisfaction ratings were strong because of the human-student interaction.

“I feel like it was really cohesive because you took a general idea, and then gave examples and then like a knowledge check… The flow of it was really good cause you took all that information and weaved it into the next slide…” -Participant 1

Recommendations

Pair reading with transcription or audio when feasible to support second-language accessibility.

Use language that makes clear that timings are estimated times to complete.

Support learning by highlighting noteworthy concepts that help with open-ended questions, and state the expected response length (e.g., "In no more than 500 words...").

When giving feedback on answers, ensure it is not hidden beneath the navigation bar. For incorrect answers, link back to the pertinent information, encourage correction, and use consistent formatting and accessible language in definition bullets.

Locked, Loaded, Launched

This project took SparkLing from low-fidelity wireframes to launch. The platform was used in beta testing and is now fully developed and used in the real world in partnership with a large early childhood education service provider. It is available to other providers as well!

Though the training requires purchase and login, you can see the landing page here.

A new video walkthrough is forthcoming.

Learnings

Client communication has been a recurring theme throughout my time as a Design and Usability Researcher, and this project solidified my skills in it. I acted as the lead design researcher for this project, reporting directly only to the clients. My collaboration with design, product owners, developers, and the VP of Product was paramount to ensuring my deliverables triangulated to support their needs.

Takeaways

Always discuss the scope of the project proactively and clearly. Re-negotiate scope when needed to align expectations.

Sprint goals are defined by cascading product goals, design and development deadlines, and the ability of usability testing to complement these goals.

Bring stakeholders together. There was some friction at the beginning of the project because stakeholders needed more clarity about design, development, and research processes. I planned review meetings with product owners and design, and worked with design and development behind the scenes to ensure we met our milestones.